Microservice authentication and authorisation scaling

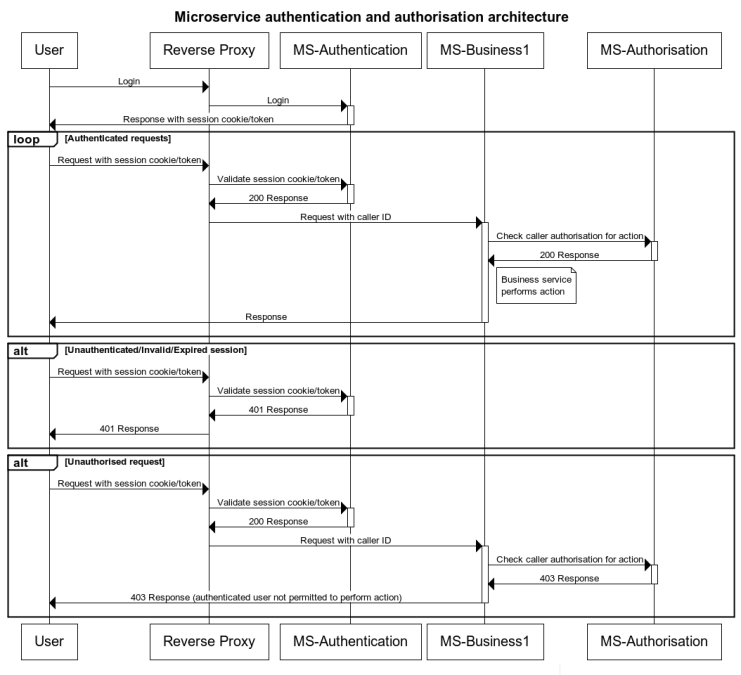

In a previous post I put up the sequence diagram below describing a design for implementing authentication and authorisation using Microservices.

What I didn't cover were the advantages of this approach when scaling your services. Authentication and authorisation are needed by most parts of your system, so they easily become a performance bottleneck. Any service in your system that needs to authenticate a user or check their permissions has to access a central data source holding this data. Outside a monolithic architecture (which has its own problems) this can be difficult: a varying number of services need to perform these functions, so the authentication and authorisation components have to scale with them.
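To make that dependency concrete, here is a minimal Python sketch. It assumes a token-based flow: a business service asks a central authentication service who the caller is, then asks a central authorisation service whether that user holds a permission. The class names, token strings and permission strings are hypothetical, in-process stand-ins for what would be network calls, not the implementation from the previous post. The per-caller counter shows why load on the authorisation service grows with every business service that depends on it.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field


@dataclass
class AuthenticationService:
    """Hypothetical central service mapping session tokens to user ids."""
    sessions: dict[str, str]
    calls: int = 0

    def authenticate(self, token: str) -> str | None:
        self.calls += 1
        return self.sessions.get(token)


@dataclass
class AuthorisationService:
    """Hypothetical central service holding each user's permissions."""
    permissions: dict[str, set[str]]
    calls: Counter = field(default_factory=Counter)

    def has_permission(self, user_id: str, permission: str, caller: str) -> bool:
        # Record which business service asked, to show the fan-in of checks.
        self.calls[caller] += 1
        return permission in self.permissions.get(user_id, set())


@dataclass
class BusinessService:
    """Any service that must authenticate and authorise before doing work."""
    name: str
    authn: AuthenticationService
    authz: AuthorisationService

    def handle(self, token: str, permission: str) -> str:
        user_id = self.authn.authenticate(token)
        if user_id is None:
            return "401 Unauthorized"
        if not self.authz.has_permission(user_id, permission, caller=self.name):
            return "403 Forbidden"
        return "200 OK"


if __name__ == "__main__":
    authn = AuthenticationService(sessions={"tok-alice": "alice"})
    authz = AuthorisationService(permissions={"alice": {"orders:read"}})
    # Ten hypothetical business services all share one authorisation service.
    services = [BusinessService(f"svc-{i}", authn, authz) for i in range(10)]
    for svc in services:
        svc.handle("tok-alice", "orders:read")
    print(sum(authz.calls.values()))  # prints 10: one check per request
```

Every request to any business service becomes at least one request to each central service, which is why those two services are the first candidates for independent scaling.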

This is one of the classic arguments for microservices, as it's easier to scale a small, focused service doing one thing than a large application with many dependencies and data sources.

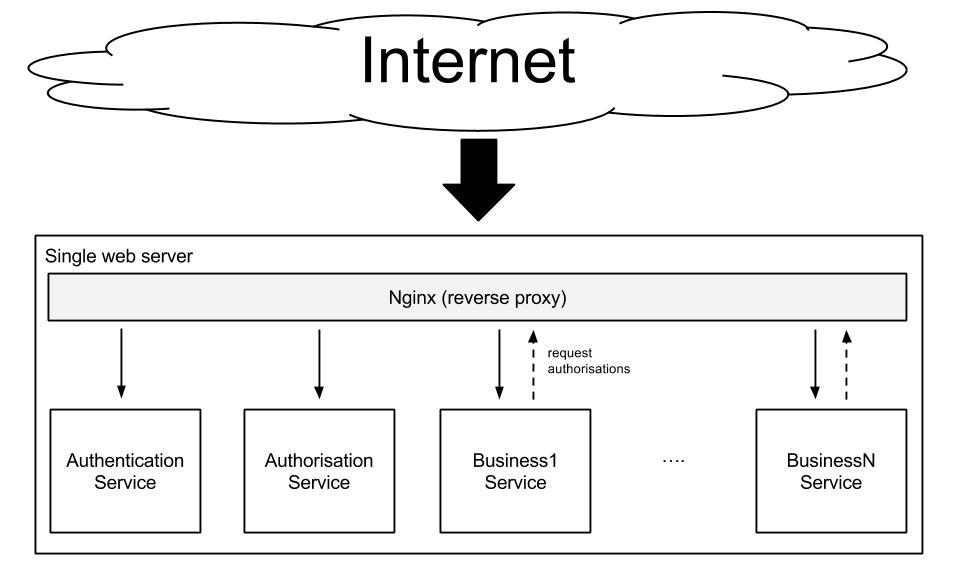

Here's the most basic architecture using the microservice authentication and authorisation design above:

This architecture can only scale vertically, by increasing the specification of the single web server. If just one of the services hosted on the box is getting a lot of requests, such as the authorisation service handling permission checks from 10 business services, then the performance of the whole application is affected. Adding processor power and memory only helps so much in this situation, and of course the system has multiple single points of failure.
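A back-of-the-envelope Python model of the single-box case may help. It assumes, hypothetically, that every business request triggers one authentication and one permission check, and that all services draw on the same server capacity (in requests per second), so their loads simply add up. All rates and capacities are illustrative, not measurements. Once total demand passes the box's capacity, every co-hosted service is overloaded, not just the busy one, and the only lever left is a bigger box.

```python
from __future__ import annotations


def demand(business_rate: float, n_business: int) -> dict[str, float]:
    """Requests/s reaching each service on the shared box, assuming every
    business request triggers one authentication and one permission check."""
    total_business = business_rate * n_business
    return {
        "business": total_business,
        "authentication": total_business,
        "authorisation": total_business,
    }


def utilisation(load: dict[str, float], capacity: float) -> float:
    """Fraction of one server's capacity in use. Co-hosted services share
    the same CPU and memory, so their loads are summed."""
    return sum(load.values()) / capacity


if __name__ == "__main__":
    largest_box = 4000.0  # hypothetical capacity of the biggest server available
    for rate in (50, 100, 200):  # hypothetical requests/s per business service
        u = utilisation(demand(rate, n_business=10), largest_box)
        status = "overloaded: every service slows down" if u > 1 else "ok"
        print(f"{rate} req/s per service -> utilisation {u:.0%} ({status})")
```

In this model, growth in permission checks alone can push the whole box past capacity, and there is no way to add capacity for the authorisation service without upgrading everything.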

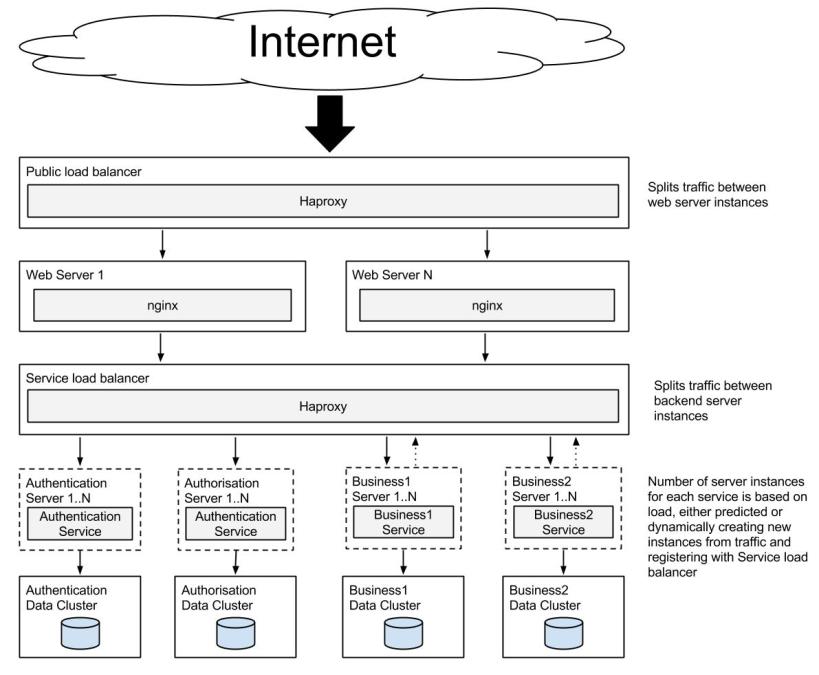

Now here's what is possible if you use load balancers and partition your microservices into separate servers:

This architecture can scale horizontally, by increasing the number of server instances for the specific services that are under heavy load. This may seem overly complex, but if your application needs to scale well it is really the only practical way to do it. It can also save hosting costs: as well as scaling up (adding instances), you can scale down (removing instances) when individual services are not under much load. A single high-spec server running all the time normally costs more than multiple small instances that are started and stopped automatically.
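The per-service scaling described above can be sketched in Python as a pool of identical instances behind a round-robin load balancer, with the instance count derived from the current load. This is a minimal illustration under stated assumptions: `ServicePool`, the per-instance capacity and the min/max limits are hypothetical, and in practice the load balancer would be something like HAProxy and the instances would be started and stopped by your provisioning tools.

```python
from __future__ import annotations

import itertools
import math
from dataclasses import dataclass, field


@dataclass
class ServicePool:
    """Hypothetical pool of identical instances of one microservice
    behind a round-robin load balancer. Capacities are illustrative."""
    name: str
    capacity_per_instance: float  # requests/s one small instance can serve
    min_instances: int = 1
    max_instances: int = 20
    instances: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        self.scale_to(self.min_instances)

    def desired_instances(self, load: float) -> int:
        """Smallest instance count covering the load, within the pool's limits."""
        needed = math.ceil(load / self.capacity_per_instance)
        return max(self.min_instances, min(self.max_instances, needed))

    def scale_to(self, count: int) -> None:
        """Start or stop instances so that exactly `count` are running."""
        self.instances = [f"{self.name}-{i}" for i in range(count)]
        # Restart the round-robin rotation over the new instance list.
        self._rr = itertools.cycle(self.instances)

    def autoscale(self, load: float) -> int:
        """Scale up or down to match the observed load; return the new count."""
        self.scale_to(self.desired_instances(load))
        return len(self.instances)

    def route(self) -> str:
        """Round-robin: each request goes to the next instance in turn."""
        return next(self._rr)


if __name__ == "__main__":
    authz = ServicePool("authorisation", capacity_per_instance=250)
    orders = ServicePool("orders", capacity_per_instance=250)
    # Hypothetical loads: a busy authorisation service and a quiet business service.
    print(authz.autoscale(1000), orders.autoscale(50))  # prints: 4 1
    print([authz.route() for _ in range(5)])
    print(authz.autoscale(100))  # load drops, so scale down to 1
```

Because each pool is sized independently, the busy authorisation service gets four instances while the quiet service stays at one, and both shrink again when load falls, which is where the hosting savings come from.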

The tools needed to implement this architecture are now very mature (HAProxy, Puppet, Docker, etc.), and cloud IaaS providers are offering better tools for managing your instances automatically.
