Managing data store changes in containers

When creating microservices, it's common to keep their persistence stores loosely coupled so that changes to one service do not affect others. Each service should manage its own concerns, own the retrieval and updating of its data, and define how and where that data is stored.

When using a relational database as a store there is an additional problem: each release may require schema or data updates. This is the well-known problem of database migration (also called schema migration or database change management).

There are a large number of tools for doing this, such as Flyway, Liquibase and DbUp. They allow you to define the schema and data for your service as a series of ordered migration scripts, which can be applied to your database regardless of whether it is a fresh database or an existing one with production data.
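The idea of ordered migration scripts can be sketched in a few lines of JavaScript. This is only an in-memory illustration, not how Flyway, Liquibase or DbUp are implemented: the `db` object, the migration ids and the `migrateToLatest` function are all hypothetical names made up for the example.

```javascript
// Minimal in-memory sketch of ordered migrations.
// `db` is a plain object standing in for a real database (an assumption for illustration).
const migrations = [
  { id: '001_create_people', up: (db) => { db.tables.people = []; } },
  { id: '002_seed_people', up: (db) => { db.tables.people.push({ name: 'Ada' }); } },
];

function migrateToLatest(db, migrations) {
  const applied = new Set(db.appliedMigrations);
  const ran = [];
  // Sort by id so the scripts always run in the same order.
  const ordered = [...migrations].sort((a, b) => a.id.localeCompare(b.id));
  for (const migration of ordered) {
    if (applied.has(migration.id)) continue; // already applied by an earlier release
    migration.up(db);
    db.appliedMigrations.push(migration.id); // record it so it is never re-run
    ran.push(migration.id);
  }
  return ran;
}

// A fresh database gets every script; running again applies nothing.
const freshDb = { tables: {}, appliedMigrations: [] };
console.log(migrateToLatest(freshDb, migrations)); // both migration ids
console.log(migrateToLatest(freshDb, migrations)); // empty array
```

Because the database records which scripts have already run, the same call works for a fresh database and for one that is several releases behind.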

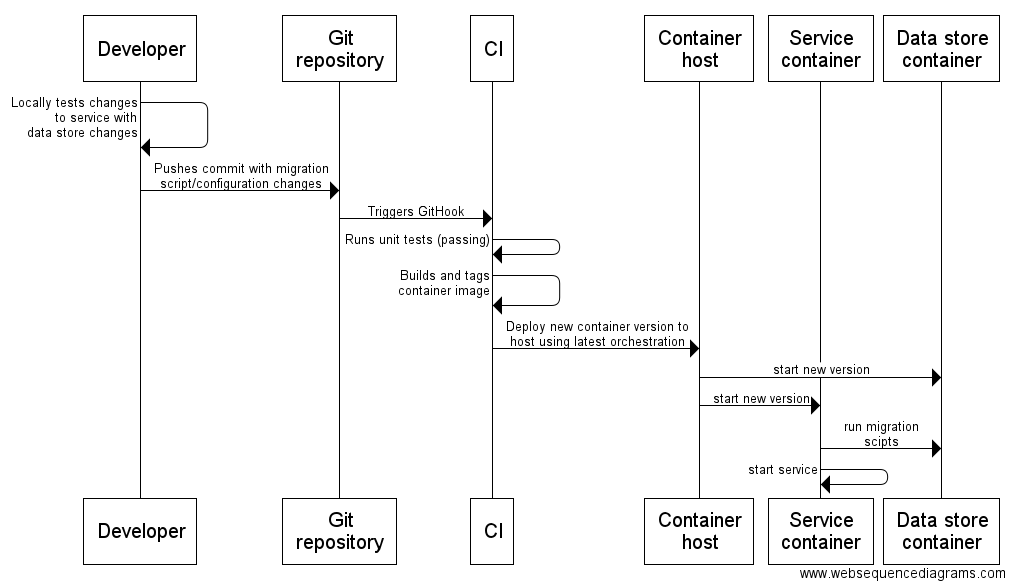

When your container service needs a relational database with a specific schema and you are performing continuous delivery, you will need to handle this problem. Traditionally this is handled separately from the service by CI, where a Jenkins or TeamCity task runs the database migration tool before the task that deploys the updated release code for the service. You will have similar problems with containers that require config changes to non-relational stores (Redis, MongoDB etc.).

This is still possible in a containerised deployment, but it has disadvantages. Your CI will need knowledge of, and a connection to, each container's data store, and will have to run the migration task for every container that has a store. As the number of containers increases, this adds more and more complexity to your CI, which needs to be aware of every container's needs and release changes.

To prevent this from happening, the responsibility for updating a persistence store should lie with the developers of the container itself, as part of the container's definition, code and orchestration details. This allows the developers to define what their persistence store is and how it should be updated in each release, leaving CI responsible only for deploying the latest version of the containers.

As an example of this I created a simple People API Node application and container, which depends on a MySQL database holding people data. Using Knex for database migration, the source defines the scripts necessary to set up the database or upgrade it to the latest version. The Dockerfile startup command waits for the database to be available, then runs the migration (`node_modules/.bin/knex migrate:latest --env development`) before starting the Node application. The containers necessary for the solution and the dependency on MySQL are defined and configured in the docker-compose.yml.
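The startup sequence described here (wait for the database, run the migration, start the application) could also be expressed in Node itself. The following is a rough sketch under stated assumptions, not the People API's actual startup code: `waitFor`, `start` and the three hooks `checkDatabase`, `runMigrations` and `startApp` are hypothetical names introduced for illustration.

```javascript
// Sketch of a container entrypoint: wait for the database, migrate, then start the app.
// checkDatabase, runMigrations and startApp are hypothetical hooks supplied by the caller.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retry `check` until it succeeds or the retry budget is exhausted.
async function waitFor(check, { retries = 30, delayMs = 1000 } = {}) {
  for (let attempt = 1; attempt <= retries; attempt++) {
    try {
      await check();
      return attempt; // number of attempts it took
    } catch (err) {
      if (attempt === retries) throw err; // give up and let the container exit
      await sleep(delayMs);
    }
  }
}

async function start({ checkDatabase, runMigrations, startApp, retries, delayMs }) {
  await waitFor(checkDatabase, { retries, delayMs });
  await runMigrations(); // bring the schema up to the latest version
  return startApp();     // only start serving once the store is ready
}
```

With Knex, one plausible wiring would be `checkDatabase: () => knex.raw('select 1')` and `runMigrations: () => knex.migrate.latest()`, where `knex` is an instance configured from the project's knexfile. Failing hard after the last retry lets the orchestrator decide whether to restart the container.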

For a web application example I created the People Web Node application, which wraps the API and displays the results as HTML. It has a docker-compose.yml that spins up containers for MySQL, node-people-api (using the latest image pushed to Docker Hub) and itself, all started with `docker-compose up`. As node-people-api manages its own store inside the container, node-people-web doesn't need any knowledge of the migration scripts used to set up the MySQL database.